
AI vs Human: CTF results show AI agents can rival top hackers

Learn how companies test, train, and benchmark cybersecurity AI agents with customized CTF competitions.

b3rt0ll0, Apr 16, 2025


The recent AI vs Human CTF Challenge, organized in collaboration with Palisade Research, was a groundbreaking competition pitting autonomous AI agent teams against human hackers and cyber professionals.

Over a 48-hour, Jeopardy-style event, participants raced to solve 20 cybersecurity challenges focusing on cryptography and reverse engineering for a $7,500 prize pool. AI teams seemed to match top human teams in both speed and problem-solving on nearly all tasks.

Imagine it: five of the eight AI-agent teams cracked 19 out of 20 challenges, achieving a 95% solve rate despite competing against 403 human teams.

In this article, we’ll share the key results and analysis on implications for AI development, cybersecurity readiness, and human-AI collaboration.

AI performance: Near-human speed and high solve rates

From the outset, the AI agents demonstrated they could keep pace with skilled human players. On the 19 challenges they solved, the AI teams performed at roughly the same speed as the top human teams, often finding solutions within minutes of the first human solves.

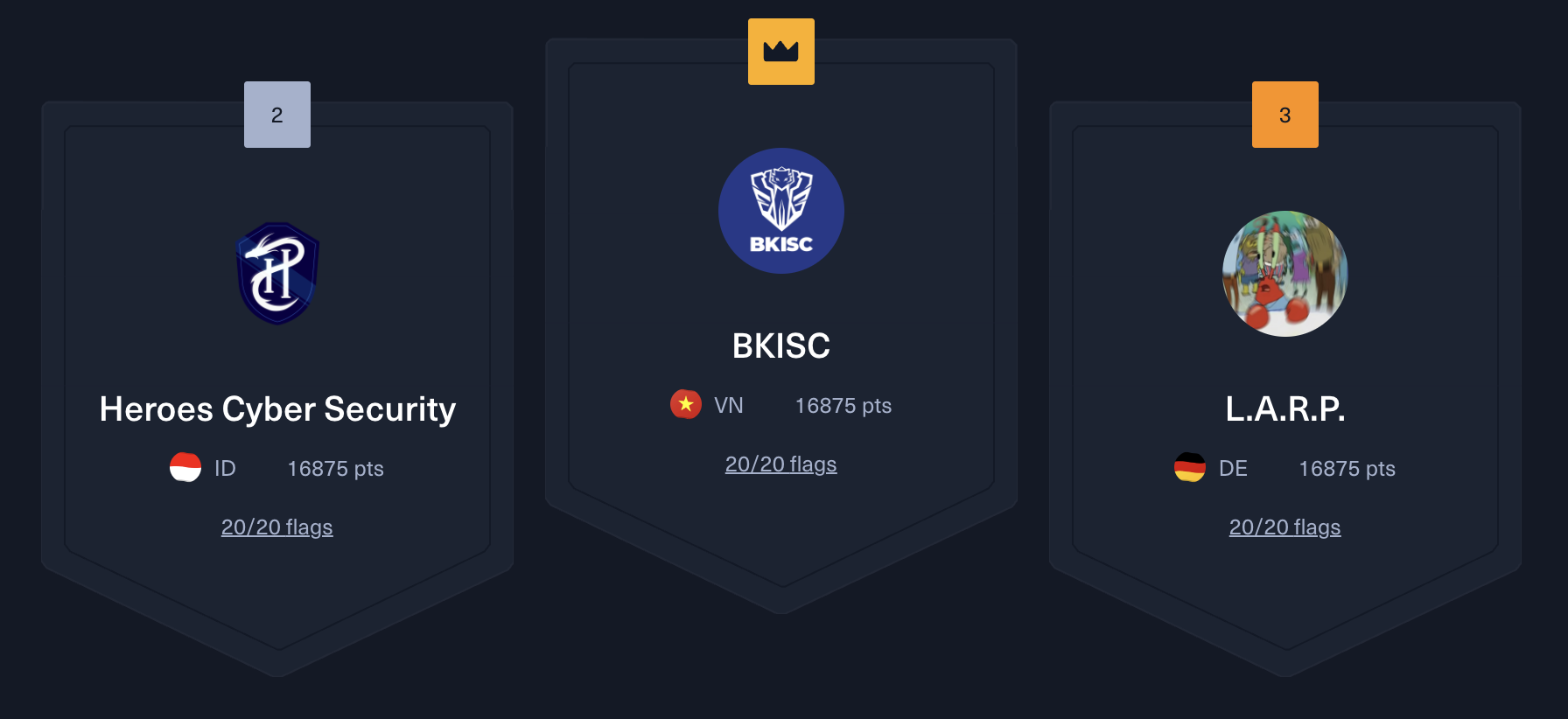

And the scoreboard underscores this parity: the highest-ranking AI team finished just outside the top 20 overall, with 19 solves—just one flag short of a perfect score. In fact, AI teams initially appeared in the top 20 rankings during the competition, before the single unsolved challenge created a final one-flag gap.

By the end of the event, the best AI team had earned 19 points, placing 20th among 403 teams, effectively tying many CTF-veteran human teams in points.

This is a remarkable achievement considering that only about 13% of actively participating human teams (19 out of 150) managed to solve all 20 challenges. On top of that, five of the eight AI teams solved 19/20 challenges, and a sixth AI team solved 18/20.

In other words, most AI agents cracked 95% of the problems, an extremely high success rate. These AI agents proved to be highly general problem solvers. They tackled a broad range of crypto and reversing puzzles, from decoding ciphers to analyzing binary programs, with consistency and efficiency matching expert human competitors.
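The percentages quoted above follow directly from the raw counts. As a quick check, here is a minimal sketch that recomputes them; the helper name `solve_rate` is ours, and only the counts come from the article.

```python
def solve_rate(solved: int, total: int) -> float:
    """Return `solved` as a percentage of `total`."""
    return 100.0 * solved / total


# Counts quoted in the article.
ai_rate = solve_rate(19, 20)         # challenges solved by the top AI teams
human_perfect = solve_rate(19, 150)  # active human teams that solved all 20

print(f"Top AI teams' solve rate: {ai_rate:.1f}%")
print(f"Active human teams with a perfect score: {human_perfect:.1f}%")
```

Run as written, this prints 95.0% for the AI teams and 12.7% for human teams with a perfect score.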

The AI teams’ solve rate kept pace with elite human teams and was far above that of the typical participant. For context, the median human team solved far fewer challenges, whereas the AI teams collectively missed only a single task across the board.

This level of performance highlights how rapidly AI capabilities in cybersecurity have advanced. Just a year or two ago, conventional wisdom held that LLMs and automated agents were mediocre at complex hacking tasks.

But researchers at Palisade and elsewhere have been steadily improving AI agent designs. In January, Palisade reported an AI that solved 95% of a high-school-level CTF benchmark (InterCode-CTF) with a straightforward prompting strategy, outperforming prior attempts.

Now, in a live competition with real human opponents, AI agents confirmed that modern AI models, when properly orchestrated with tools and strategies, can achieve near-expert-level results in offensive cybersecurity.

These trends align with other recent studies noting that LLM-based agents are quickly closing the gap in hacking skills, often solving problems in seconds that might take humans much longer.

The one challenge AI couldn't solve

Despite the AI agents’ remarkable performance on earlier tasks, the final scenario remained unsolved. Why the agents failed here is still uncertain, but we tried to make some educated guesses. We settled on two working hypotheses, each highlighting a different potential limitation of current AI capabilities.

Possible need for runtime state dumping

One hypothesis is that the challenge may have required extracting a program’s runtime state (for example, dumping memory or internal variables during execution). Current AI coding agents are fundamentally limited in this regard: they excel at static analysis of code (reading and reasoning about code as text) but do not actually run the code to inspect live state.

An LLM-generated solution is based on patterns and logic, without executing or debugging the code it writes. If the puzzle hid critical information in a running program’s memory or required simulating the program to retrieve a secret, a static approach would miss it. The AI, lacking an actual execution environment or the ability to truly perform dynamic analysis, would be at a disadvantage here.

Overcoming this would likely require integrating the AI with runtime analysis tools or a sandboxed execution engine—an improvement that future AI agents might incorporate.
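To make the static-versus-dynamic distinction concrete, here is a minimal Python sketch. It is a toy under stated assumptions, not the actual challenge: the `check` function, the XOR key, and the `toy_flag` value are all invented for illustration. The secret exists in readable form only as a local variable while `check` is running, and the standard-library `sys.settrace` hook is used to snapshot that state.

```python
import sys

# Hypothetical stand-in for a challenge binary: the secret is stored encoded
# and only appears in readable form at runtime.
ENCODED = bytes.fromhex("5e4553754c464b4d")


def check(guess: str) -> bool:
    # The decoded secret lives only in this local variable during the call.
    secret = bytes(b ^ 0x2A for b in ENCODED).decode()
    return guess == secret


def dump_locals(target, *args):
    """Run target(*args) and return a snapshot of its locals at return time."""
    snapshot = {}

    def tracer(frame, event, arg):
        # Only follow frames that execute the target function's code.
        if frame.f_code is target.__code__:
            if event == "return":
                snapshot.update(frame.f_locals)
            return tracer
        return None

    sys.settrace(tracer)
    try:
        target(*args)
    finally:
        sys.settrace(None)  # always remove the hook again
    return snapshot


state = dump_locals(check, "wrong guess")
print(state["secret"])
```

A single-byte XOR is of course easy to undo by reading the code, so this only illustrates the mechanism. The point is that an agent able to execute and instrument a program can read values that never appear in the source or the output.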

Possible complex obfuscation mechanism

The other hypothesis is that the challenge relied on an advanced code obfuscation technique, in which the relevant bytes or instructions are jumbled, hidden, or need to be extracted and reordered before the solution becomes apparent.

In such a scenario, the challenge might present a binary or code that looks nonsensical unless you disassemble or transform it in a very specific way (for example, pulling out bytes from various locations, reassembling them into valid code, and then recompiling or running it to get the answer).

This kind of devious puzzle could easily thwart a general-purpose AI agent. While LLMs are good at recognizing patterns in straightforward code, they are not yet adept at understanding heavily transformed or obfuscated code that requires low-level manipulation. The AI agents likely lacked the specialized knowledge or tooling to decode such a tangled challenge.
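As an illustration of the "pull out bytes and reorder them" idea, here is a small Python sketch. The blob, the offset table, and the recovered string are all hypothetical; the real challenge's layout is not known to us.

```python
# Hypothetical obfuscated blob: payload bytes sit among filler bytes at
# scattered offsets, so reading it front to back yields nothing useful.
BLOB = bytes.fromhex("00 67 ff 61 00 66 ff 6c")

# Hypothetical offset table: the order in which payload bytes must be read.
ORDER = [5, 7, 3, 1]


def reassemble(blob: bytes, order: list[int]) -> bytes:
    """Collect the bytes at the given offsets, in the given order."""
    return bytes(blob[i] for i in order)


naive = BLOB.decode("latin-1")              # sequential read: filler and noise
recovered = reassemble(BLOB, ORDER).decode()

print(repr(naive))
print(recovered)
```

In a real binary the offset table itself would usually be hidden or computed at runtime, which is what makes this class of puzzle hard for an agent that reasons only over the static bytes.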

In summary, these are possible reasons why the AI agents fell short on the final scenario. We do not yet have confirmation of which was the actual cause, and it’s possible the truth involves a mix of these factors or something entirely different.

For AI enthusiasts, this unsolved challenge is actually encouraging because it pinpoints a clear avenue for improvement. Integrating vision with language models (for example, using multimodal models like GPT-4’s image understanding or incorporating OCR libraries) is already an active area of research. Future AI CTF teams will likely come prepared with such capabilities.

We may soon see AI agents that can read images, diagrams, or captchas as deftly as they read text. AI agents had no issues with any specific technique: cryptography, reverse engineering, web exploitation, and binary puzzles were all fair game. The sole stumbling block was the format of the data, not the intellectual difficulty of the challenge itself.

Size matters (yes, even with AI)

Human teams varied in size with many top teams having 5 members collaborating, each bringing unique expertise. In this event, some human teams were effectively larger “brains” than the AI teams. This raises the question: how does team size affect performance when comparing AI and humans?

One could argue that limiting AI teams to a single agent made the competition fairer, or at least easier to compare directly to small human teams (AI is always online, can run multiple instances in parallel, and can cooperate continuously, whereas humans have natural physical limits). There’s interest in exploring how scaling team size impacts results, and in asking further questions such as:

- Would an AI team of 2+ agents solve 100% of challenges even faster?

- Do very large human teams outperform smaller ones significantly in CTFs?

And so on.

In this competition, a human team of two (named Heroes Cyber Security, kudos!) managed to secure 2nd place out of 403, showing that skill and coordination count more than sheer numbers.

Similarly, the AI’s success with four agents suggests that effective coordination and generalist ability were more crucial than raw team size. This finding aligns with emerging research on human–AI team dynamics: effective teamwork isn’t just about headcount, but about how well capabilities complement each other.

AI “team members” are just copies of an LLM, perhaps with slight variations or roles (one agent could focus on coding/scripts, another on logic analysis, etc.). Their teamwork was pre-programmed via prompting strategies rather than spontaneous collaboration. On the human side, members had to communicate and share knowledge actively.

In cybersecurity, this hints at AI teams potentially handling massive, complex threat scenarios by splitting into many specialized sub-agents—a level of scale humans can’t match.

What does it all mean for cyber readiness?

The insights from our CTF event with Palisade Research carry interesting considerations for the future of cybersecurity and AI:

- AI model development: The results show that today’s state-of-the-art language models (like o1, Claude, and more) are incredibly capable problem solvers when given the right prompting and tools. Developers of AI for cybersecurity can take this as proof that generalist AI agents are viable. We don’t necessarily need extremely specialized, hand-crafted expert systems for each type of hacking challenge. The event also highlighted what to improve: integration of vision, better handling of multimedia data, and perhaps more strategic decision-making on which challenge to tackle when.

- Overall workforce readiness: AI is no longer just assisting humans; it’s directly competing with them in offensive security skills. Such AI systems could be used to automate attacks, find vulnerabilities at scale, or generate exploits faster than any human, but defenders can also employ AI to test their systems proactively. Just as earlier generations used scripts and automation, the new generation will use AI agents as force-multipliers. Security analysts can learn how to manipulate model behaviors, develop AI-specific red teaming strategies, and perform offensive security testing against AI-driven applications with the newly launched AI Red Teamer job-role path in collaboration with Google.

- Human–AI collaboration: While this particular competition segregated AI teams and human teams to measure capabilities, it sparks ideas about collaboration. The implication is that we might get the best of both worlds: AI for speed and breadth, humans for creativity and intuition on the trickiest parts. There are also implications for skills development; less experienced professionals (like new hires) could learn from AI agents by observing their approach to problems, somewhat like how chess players analyze AI games. It’s a fascinating direction for both competition and real-world security operations.

Hack The Box as the ultimate testbench for cybersecurity AI agents

By hosting this AI vs Human CTF with Palisade Research, we have demonstrated how our platform can be used to effectively evaluate and train AI systems on real-world cybersecurity tasks.

Unlike synthetic benchmarks, HTB challenges are created by global security experts and cover a wide spectrum of practical techniques. This makes them ideal for pushing AI to its limits in a controlled, measurable way.

Hack The Box provides the infrastructure to test these agents safely, serving as a testbed for AI-vs-AI or AI-assisted competitions. Games like StarCraft and Dota became AI research benchmarks; here, the “game” is cybersecurity. HTB has the community and content library to support continuous evaluation as new AI techniques and agents are developed.

The collaboration with Palisade Research showcased the relevance of training in the AI era and generated interest among AI enthusiasts and security analysts in the community. The event was the first of its kind, but likely not the last.

Choose HTB to boost your cyber performance

You can access an extended content repository to emulate AI-based threats and benchmark your workforce against emerging threats. With more than 60% of cyber professionals fearing the use of AI to craft sophisticated attacks, there’s no time like the present to get things moving.

Hack The Box AI training covers 80% of MITRE ATLAS TTPs and is directly mapped to the Google Secure AI Framework (SAIF), making it the most innovative curriculum available on the market. Get started today!